Posted On June 3, 2025

Large Language Models (LLMs) have leapt off the server and into your pocket. In 2025, integrating LLMs into mobile apps is no longer a novelty; it is fast becoming the standard for intelligent UX.

From personalized assistants to offline AI reasoning, developers now harness LLMs directly in their apps, through both cloud APIs and on-device inference. But how exactly are they doing it? And what makes it work smoothly, securely, and at scale?

This article explores practical use cases, design strategies, and best practices for embedding LLMs into mobile applications today.

Why LLMs in Mobile?

Mobile users expect apps to be fast, intuitive, and increasingly proactive. With LLMs, developers can meet these demands by introducing:

Context-aware chat or instruction interfaces

Natural language form filling or command execution

Real-time summarization or content rewriting

Onboarding and guidance systems that adapt as users explore

Autonomous workflows triggered by simple prompts

In short, LLMs make apps more human.

Cloud vs On-Device: The Deployment Split

There are two common LLM integration strategies:

1. Cloud-Hosted LLMs

Best for apps requiring:

Heavy context processing

Frequent updates from the model provider

Consistent model performance

Complex data fetching or chaining logic

Examples:

Customer support bots in finance apps

AI writing assistants

Language translation with context memory

2. On-Device LLMs

Best for apps requiring:

Low latency or offline functionality

Full data privacy (e.g. health, journaling)

Edge computing performance

Battery-aware usage

Examples:

Smart keyboards with prompt understanding

Offline chat assistants

On-device task summarizers

Lightweight LLM variants (roughly 1–3B parameters), usually in quantized form, now run smoothly on mid-tier smartphones. For anything more demanding, hybrid setups handle routine requests on the device and call on the cloud for heavier ones.
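The hybrid split can be expressed as a simple routing rule. The Python sketch below is illustrative only: the `Request` fields, the 2,000-token threshold, and the order of the checks are assumptions, not a prescribed policy. Sensitive or offline requests stay on the device, and long-context requests go to the cloud when a connection is available.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    ON_DEVICE = "on_device"
    CLOUD = "cloud"


@dataclass
class Request:
    prompt_tokens: int  # estimated prompt length in tokens
    sensitive: bool     # e.g. health or journaling content
    online: bool        # whether a network connection is available


# Hypothetical threshold: prompts longer than this go to the cloud model.
MAX_ON_DEVICE_TOKENS = 2000


def route(req: Request) -> Target:
    """Pick a deployment target for one request (illustrative policy)."""
    # Privacy first: sensitive data never leaves the device.
    if req.sensitive:
        return Target.ON_DEVICE
    # Offline, the local model is the only option.
    if not req.online:
        return Target.ON_DEVICE
    # Heavy context processing goes to the larger cloud model.
    if req.prompt_tokens > MAX_ON_DEVICE_TOKENS:
        return Target.CLOUD
    # Short, non-sensitive prompts stay local for lower latency.
    return Target.ON_DEVICE
```

For example, `route(Request(prompt_tokens=5000, sensitive=False, online=True))` returns `Target.CLOUD`. Putting the privacy check first means privacy always wins over model capability; an app could instead ask the user before sending a long, sensitive request off the device.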

2025 Use Cases by Category

Let’s explore specific application examples where LLMs are redefining the experience.

Productivity Apps

Use Case: Summarizing notes, rewriting text, generating emails

LLMs help users capture ideas in shorthand and auto-expand them into readable content

Slash commands such as “/summarize” or “/write professionally” trigger specific actions inline

Memory systems track prior notes for context-aware suggestions

Best Practice: Use prompt templates for consistent outputs, and batch generations to save API usage.
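Here is a minimal sketch of both halves of that practice, assuming a cloud API billed per request and tokens. The template wording, the `[n]` numbering convention, and the function names are hypothetical, and the actual model call is left out. Several notes are packed into one request, and the numbered reply is split back into per-note answers.

```python
import re

# Hypothetical templates keyed by slash command; wording is illustrative.
TEMPLATES = {
    "/summarize": "Summarize in at most {max_sentences} sentences:\n{text}",
    "/write professionally": "Rewrite in a professional tone:\n{text}",
}


def render(command: str, text: str, max_sentences: int = 3) -> str:
    """Fill the template for a slash command; unknown commands raise KeyError."""
    # str.format ignores keyword arguments a template does not use.
    return TEMPLATES[command].format(text=text, max_sentences=max_sentences)


def render_batch(command: str, notes: list[str]) -> str:
    """Combine several notes into one prompt so they share a single API call."""
    numbered = "\n".join(f"[{i}] {note}" for i, note in enumerate(notes, start=1))
    instructions = (
        "Apply the instruction below to each numbered note separately. "
        "Answer with one line per note, prefixed by its number in brackets.\n"
    )
    return instructions + render(command, numbered)


def split_batch_reply(reply: str, count: int) -> list[str]:
    """Map a '[n] answer' style reply back to the individual notes."""
    answers = [""] * count
    for line in reply.splitlines():
        match = re.match(r"\[(\d+)\]\s*(.*)", line.strip())
        if match and 1 <= int(match.group(1)) <= count:
            answers[int(match.group(1)) - 1] = match.group(2)
    return answers
```

Models do not always follow the numbering exactly, so any note left with an empty answer can be retried on its own.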

Health & Wellness

Use Case: Private mental health journaling or daily reflection

On-device LLMs offer offline support for mood tracking or journaling

Prompts like “how was your day?” lead to full reflections written collaboratively

AI can flag potentially harmful patterns without sending any data to the cloud

Best Practice: Prioritize privacy-first model use with local encryption and zero telemetry.

E-Commerce & Shopping

Use Case: Smart product discovery, AI-driven upsell suggestions

LLMs interpret free-form queries like “looking for comfy black running shoes under $100”

Conversational product finders feel more like talking to an expert than applying a filter

Post-purchase assistants offer re-ordering, tracking, and FAQ support

Best Practice: Refine product-focused prompts for clarity, and use feedback loops to improve accuracy over time.
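One common way to turn a free-form query into a product search is to ask the model for structured filters and validate its reply before using it. The sketch below assumes a JSON reply with hypothetical `category`, `color`, and `max_price` keys; the prompt wording is illustrative and the model call itself is omitted.

```python
import json
from dataclasses import dataclass
from typing import Optional

# Hypothetical extraction prompt; the key names are illustrative assumptions.
EXTRACTION_PROMPT = (
    "Extract shopping filters from the query below. Reply with JSON only, using "
    'the keys "category", "color", and "max_price" (a number or null).\n'
    "Query: {query}"
)


@dataclass
class ProductFilter:
    category: Optional[str]
    color: Optional[str]
    max_price: Optional[float]


def parse_filter(reply: str) -> Optional[ProductFilter]:
    """Validate the model's reply; None signals a fallback to plain keyword search."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    price = data.get("max_price")
    if price is not None and not isinstance(price, (int, float)):
        return None
    category = data.get("category")
    color = data.get("color")
    return ProductFilter(
        category=category if isinstance(category, str) else None,
        color=color if isinstance(color, str) else None,
        max_price=float(price) if price is not None else None,
    )
```

For “looking for comfy black running shoes under $100”, a well-behaved reply would parse to `ProductFilter("running shoes", "black", 100.0)`. Logging the queries that fail to parse is one simple input to the feedback loop described above.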

Education

Use Case: Personalized tutoring inside learning apps

LLMs explain concepts in simpler terms or offer examples when users are stuck

Tone and difficulty adapt to the user’s responses

The AI encourages independent thinking by prompting the user with questions instead of answering directly

Best Practice: Use multi-turn dialogue and insert checkpoints that validate understanding.
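A multi-turn tutor can insert understanding checks on a fixed cadence. This sketch assumes the role/content message format used by many chat APIs, a hypothetical cadence of one checkpoint every three tutor turns, and illustrative checkpoint wording; sending the request to a model is left out.

```python
from dataclasses import dataclass, field

# Hypothetical wording for the understanding check.
CHECKPOINT_PROMPT = (
    "Before continuing, ask the student one short question that checks whether "
    "they understood the last explanation. Do not reveal the answer."
)


@dataclass
class TutorSession:
    system_prompt: str
    checkpoint_every: int = 3  # assumed cadence: check after every 3 tutor turns
    messages: list[dict] = field(default_factory=list)
    tutor_turns: int = 0

    def add_user(self, text: str) -> None:
        self.messages.append({"role": "user", "content": text})

    def add_tutor(self, text: str) -> None:
        self.messages.append({"role": "assistant", "content": text})
        self.tutor_turns += 1

    def next_request(self) -> list[dict]:
        """Build the message list for the next model call, adding a checkpoint when due."""
        request = [{"role": "system", "content": self.system_prompt}, *self.messages]
        if self.tutor_turns > 0 and self.tutor_turns % self.checkpoint_every == 0:
            request.append({"role": "system", "content": CHECKPOINT_PROMPT})
        return request
```

Keeping the full history in `messages` is what makes the dialogue multi-turn; on long sessions an app would likely trim or summarize older turns to stay within the model’s context window.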

Travel & Navigation

Use Case: AI itineraries and conversational navigation

Ask: “Plan a day in Istanbul with food spots and museums”

The LLM produces a personalized itinerary using real-time data

In-car or map-integrated assistants guide users through natural conversation

Best Practice: Cache user preferences (budget, interests, pace) to make LLM responses feel truly custom.
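A minimal sketch of caching preferences and folding them into every itinerary prompt follows. A JSON file stands in for whatever local key-value store the app actually uses, and the `TravelPrefs` fields and prompt format are assumptions for illustration.

```python
import json
from dataclasses import asdict, dataclass, field
from pathlib import Path


@dataclass
class TravelPrefs:
    budget: str = "medium"  # e.g. "low", "medium", "high"
    interests: list[str] = field(default_factory=list)
    pace: str = "relaxed"   # e.g. "relaxed", "packed"


def save_prefs(prefs: TravelPrefs, path: Path) -> None:
    """Persist preferences locally so they survive app restarts."""
    path.write_text(json.dumps(asdict(prefs)))


def load_prefs(path: Path) -> TravelPrefs:
    """Load saved preferences, or return defaults on first launch."""
    if not path.exists():
        return TravelPrefs()
    return TravelPrefs(**json.loads(path.read_text()))


def itinerary_prompt(request: str, prefs: TravelPrefs) -> str:
    """Prefix the user's request with the cached traveler profile."""
    interests = ", ".join(prefs.interests) or "no stated interests"
    return (
        f"Traveler profile: budget={prefs.budget}; interests={interests}; "
        f"pace={prefs.pace}.\n"
        f"Request: {request}"
    )
```

With this in place, “Plan a day in Istanbul with food spots and museums” reaches the model together with the stored budget and pace, so the user does not have to restate them each time.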

Developer Considerations in 2025

When integrating LLMs in mobile, developers must solve five critical challenges:

1. Latency

LLMs are only as helpful as they are fast. Users won’t wait 8 seconds for a sentence to form. Compact on-device models (for example, distilled or quantized variants of 7B-class models such as Mistral 7B) can return responses to short prompts in under 2 seconds on recent high-end phones.

Tip: Use background pre-generation. Start the LLM early when you predict the user will need it.
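Background pre-generation can be sketched with a small thread pool. In this sketch, `generate` is a stand-in for the real on-device or cloud call, and deciding when to call `prefetch` (say, when the user opens a notes screen) is app-specific logic the sketch does not cover.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable


class PreGenerator:
    """Start likely generations early and reuse the result if the user asks for it."""

    def __init__(self, generate: Callable[[str], str], max_workers: int = 1):
        self._generate = generate  # wraps the actual model call
        self._pool = ThreadPoolExecutor(max_workers=max_workers)
        self._pending: dict[str, Future] = {}

    def prefetch(self, prompt: str) -> None:
        """Kick off generation in the background for a predicted prompt."""
        if prompt not in self._pending:
            self._pending[prompt] = self._pool.submit(self._generate, prompt)

    def get(self, prompt: str, timeout: float = 10.0) -> str:
        """Return the pre-generated result if there is one, else generate now."""
        future = self._pending.pop(prompt, None)
        if future is None:
            future = self._pool.submit(self._generate, prompt)
        return future.result(timeout=timeout)

    def discard(self, prompt: str) -> None:
        """Drop a wrong prediction; cancel() only succeeds if it has not started yet."""
        future = self._pending.pop(prompt, None)
        if future is not None:
            future.cancel()

    def shutdown(self) -> None:
        self._pool.shutdown(wait=False, cancel_futures=True)
```

Each wrong prediction still costs compute (and battery on the device), so it pays to prefetch only for high-confidence predictions and to discard stale ones promptly.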

2. Privacy & Data Handling

User inputs must be treated as sensitive data, especially in finance, health, and education apps.

Tip: Avoid storing prompts and responses unless encrypted. Use on-device models where regulatory compliance is strict.

3. Prompt Engineering

Many use cases can be served by an off-the-shelf instruction-tuned model and solid prompt design, with no fine-tuning needed.

Tip: Modularize your prompts in code and test them often. Label each prompt by output tone, length, and function.
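One way to modularize and label prompts is a small registry of immutable prompt specs. The `PromptSpec` and `PromptRegistry` names and the label values are hypothetical; the point is that each prompt carries its function, tone, and length as data that code and tests can query.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptSpec:
    name: str
    function: str  # e.g. "summarize", "rewrite"
    tone: str      # e.g. "neutral", "professional"
    length: str    # e.g. "short", "long"
    template: str  # str.format placeholders such as {text}

    def render(self, **values: str) -> str:
        return self.template.format(**values)


class PromptRegistry:
    def __init__(self) -> None:
        self._specs: dict[str, PromptSpec] = {}

    def register(self, spec: PromptSpec) -> None:
        if spec.name in self._specs:
            raise ValueError(f"duplicate prompt name: {spec.name}")
        self._specs[spec.name] = spec

    def get(self, name: str) -> PromptSpec:
        return self._specs[name]

    def find(self, **labels: str) -> list[PromptSpec]:
        """Return every prompt whose labels match all of the given values."""
        return [
            spec for spec in self._specs.values()
            if all(getattr(spec, key) == value for key, value in labels.items())
        ]
```

Because each spec is plain data, a unit test can render every registered prompt with sample values and fail fast if an edit renames or drops a placeholder.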

4. Caching & Token Management

When using cloud LLMs, repeated prompts cost money.

Tip: Cache prompt-response pairs for FAQs and refresh them only when they go stale. Reduce token usage by chunking content so that each request carries only the relevant part.
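Both ideas fit in a few lines. This sketch assumes a simple time-to-live (TTL) policy for staleness and uses word counts as a rough stand-in for tokens (real counts depend on the model’s tokenizer); `FaqCache`, `chunk_words`, and the `fetch` callback are hypothetical names.

```python
import time
from typing import Callable


class FaqCache:
    """Cache prompt -> response pairs; entries older than ttl_seconds count as stale."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: dict[str, tuple[float, str]] = {}

    @staticmethod
    def _key(prompt: str) -> str:
        # Normalize case and whitespace so trivially different phrasings share an entry.
        return " ".join(prompt.lower().split())

    def get_or_fetch(self, prompt: str, fetch: Callable[[str], str]) -> str:
        key = self._key(prompt)
        entry = self._entries.get(key)
        now = self._clock()
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]  # fresh hit: no API call, no tokens spent
        response = fetch(prompt)  # miss or stale entry: call the cloud model
        self._entries[key] = (now, response)
        return response


def chunk_words(text: str, max_words: int) -> list[str]:
    """Split text into chunks of at most max_words words (a rough proxy for tokens)."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]
```

This kind of cache suits generic FAQ answers; responses that depend on a particular user’s data should not be shared through a prompt-keyed cache.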

5. UI/UX Strategy

LLM features should feel native, not bolted on. Users don’t need to know that AI is at work, only that the experience is smooth.

Tip: Keep the UI minimal: chips, suggestions, smart inputs. Avoid simulated typing effects unless they are near-instant.

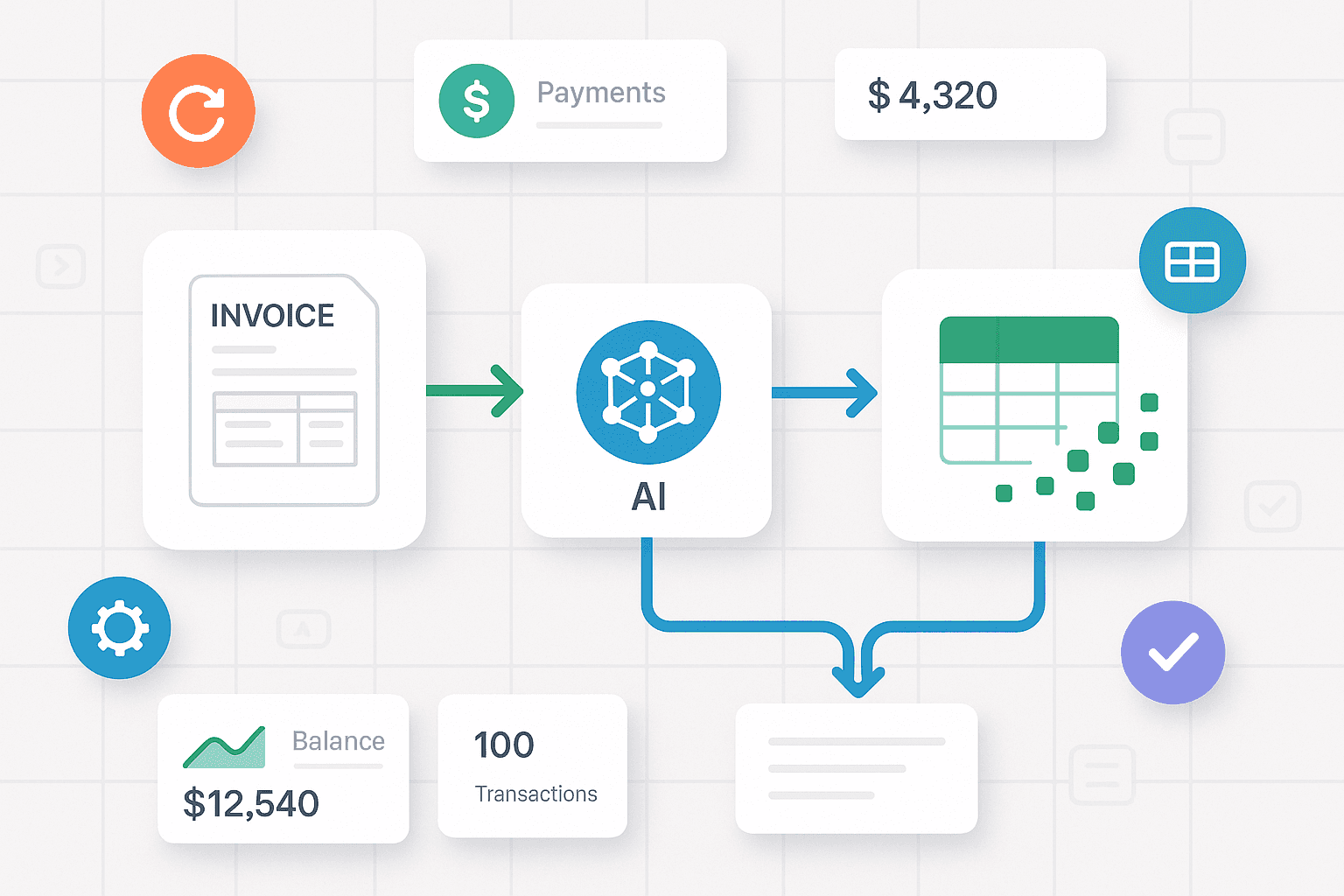

LLMs in Agensync’s Mobile Projects

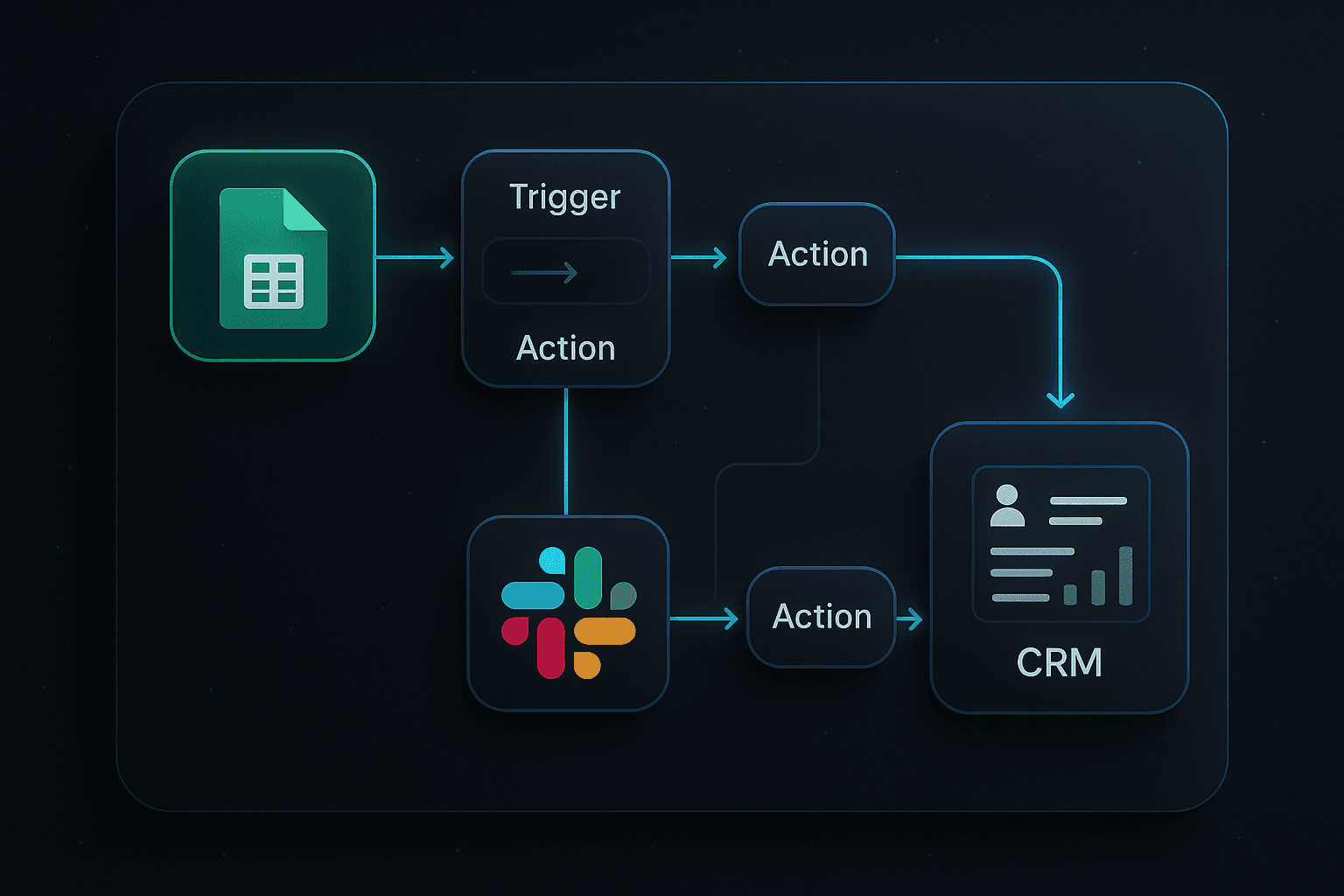

At Agensync, we help startups and enterprises bring LLMs to life in mobile apps. Whether the goal is personalization, automation, or smarter UX, our dev and AI teams work together to:

Identify which features need LLM support

Choose the right deployment model (on-device, cloud, or hybrid)

Build reusable AI pipelines and workflows via n8n or Make.com

Deploy lightweight APIs and mobile-optimized inference engines

Embed features that feel like magic, not a science experiment

Our clients in wellness, e-commerce, and learning have seen faster user growth and better retention, because their apps think ahead.

What's Next: Autonomous Agents Inside Apps

In 2025, LLMs are already crossing over from reactive assistants to proactive decision-makers. The next wave includes:

Mini AI agents inside apps that handle tasks across screens

Goal-based interfaces (e.g., “Make my morning productive”) where LLMs sequence tasks

Multi-modal input (voice + image + gestures) for richer understanding

Context permanence, where the app “remembers” what you said last week

The future isn’t just about a chatbot. It’s about creating apps that feel alive—because they adapt, respond, and evolve.

Final Thoughts

The LLM-mobile pairing in 2025 is the most powerful developer toolkit yet. It allows you to:

Build human-like interfaces

Offer hyper-personalized content

Increase retention with meaningful automation

Serve users in real time, even offline

Bridge command, intent, and interface into one conversation

The question isn’t “should we use LLMs in our mobile app?”

It’s: “How can we use them wisely, securely, and delightfully?”

At Agensync, we turn that question into answers—through world-class automation, AI workflows, and mobile UX excellence.

Let’s build the app your users won’t want to live without.
