Posted By

Posted On

June 11, 2025

Search is no longer just typed into a box. In 2025, people speak, snap, or scan to get results. And behind the scenes, AI is decoding those queries—across voice, image, and even video input. If your content isn’t ready for that shift, it won’t be found.

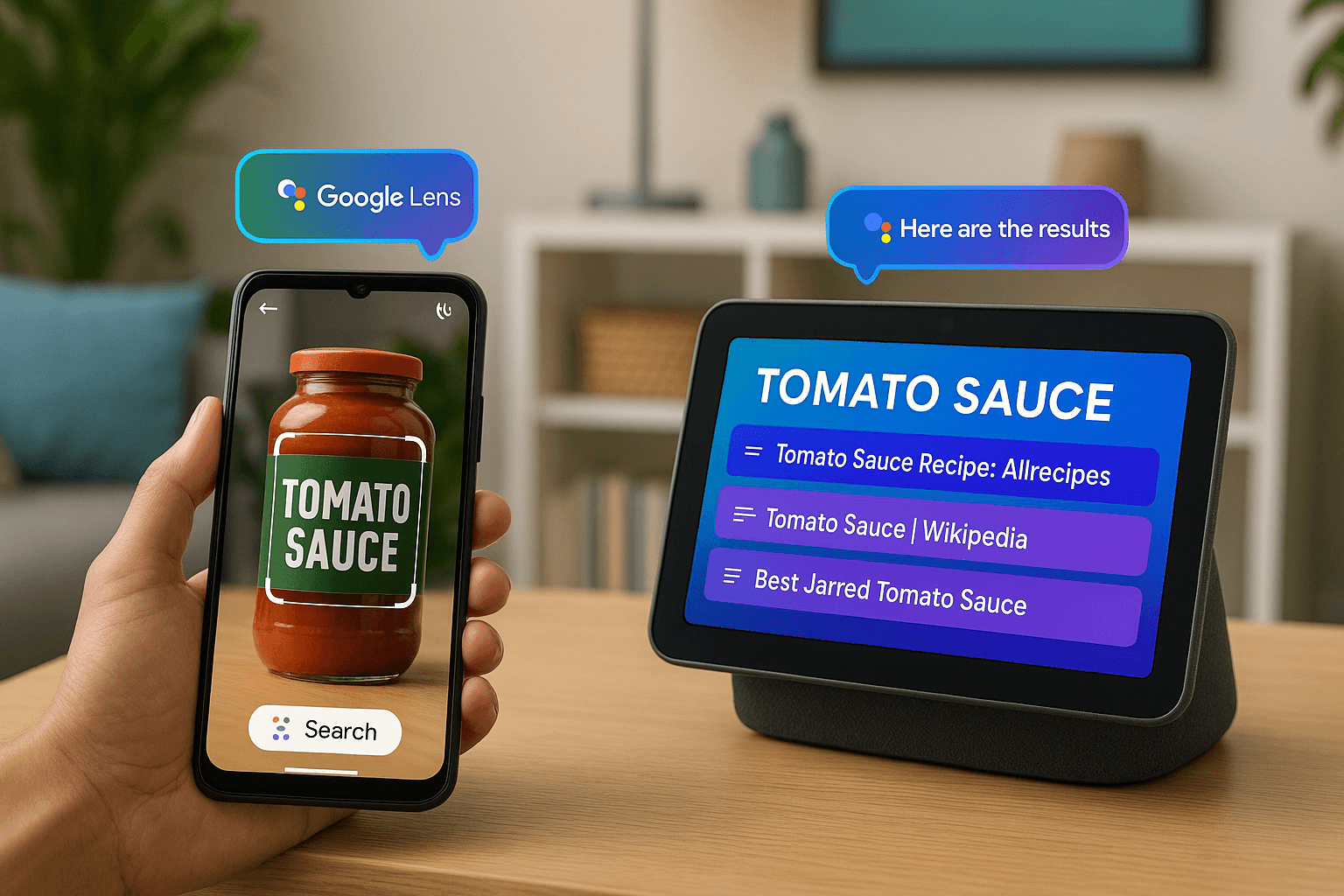

Multimodal search, which includes voice and visual inputs, has become essential to modern SEO. Assistants like Google Assistant, Siri, and Alexa now power voice discovery, while tools like Google Lens enable users to search the web by pointing a camera. For brands, that means the definition of “search visibility” has changed. It's not just about being number one on text-based Google—it’s about being interpretable by multiple senses.

Agensync helps forward-thinking businesses prepare for this new era of discovery. Let’s explore how to optimize for voice and visual AI search using scalable SEO automation and smart content strategy.

The Rise of Multimodal Search

Voice search began as a convenience, but it has evolved into a major segment of queries—especially on mobile and smart home devices. Visual search has grown just as fast, with apps like Google Lens, Pinterest Lens, and integrated camera-based search in e-commerce platforms now common.

These inputs aren’t just novelties. They signal user intent faster and more contextually. Someone asking a voice assistant about “the nearest open café with outdoor seating” or snapping a photo of shoes they like is giving more specific, actionable input than a typed query ever could.

Optimizing for this means your content—and structure—must be machine-readable in new ways.

Structuring for Voice Search

Voice assistants rely on structured data, fast load speeds, clear semantic markup, and natural language content. To get your content read aloud or cited in voice results, here’s what matters:

Your content must sound conversational. Voice search queries are typically full sentences or questions, not keyword-stuffed phrases. Content should reflect that. Think FAQs, how-tos, and answer-first formatting.

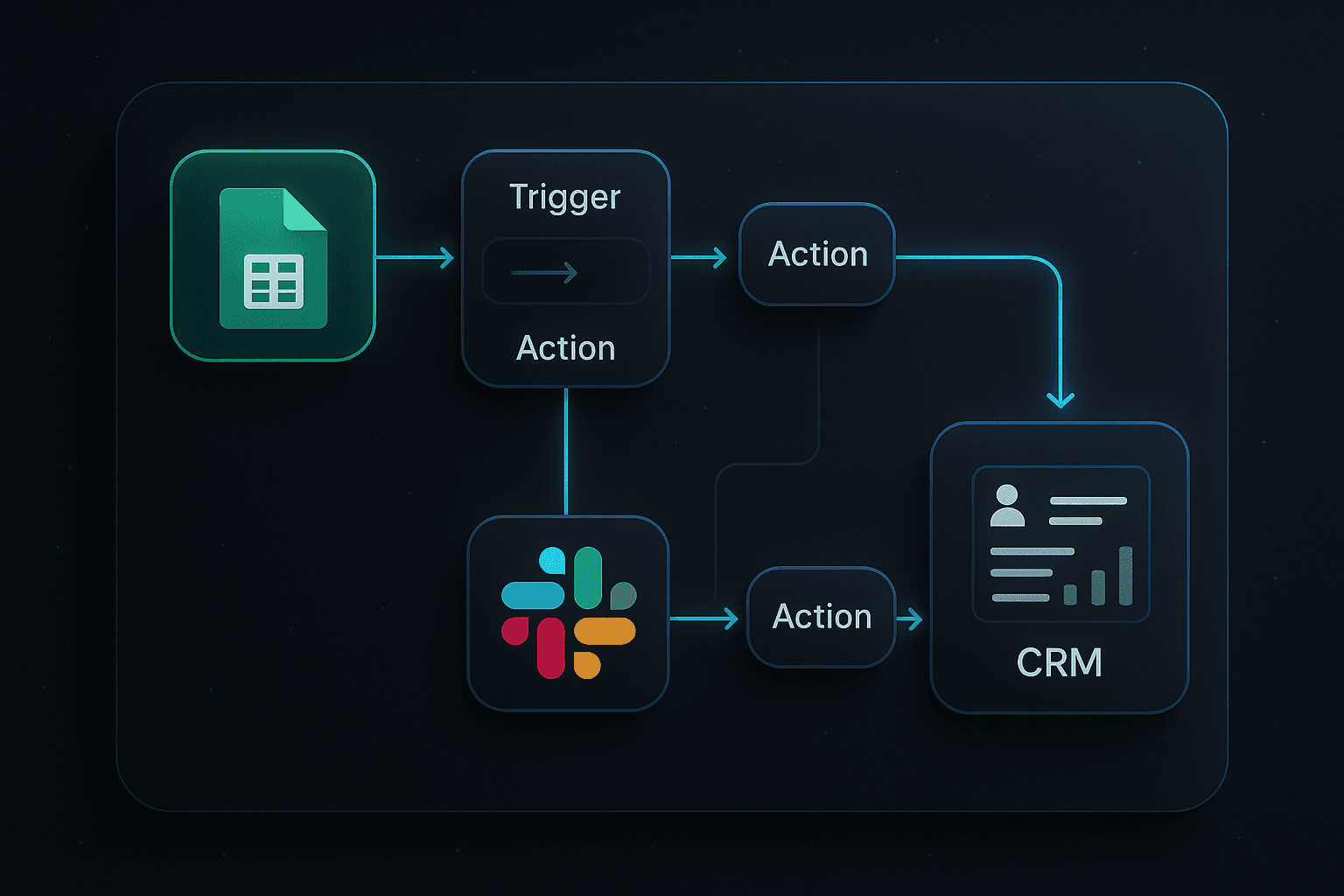

At Agensync, we automate this with structured content blocks. We use Make.com workflows to convert pillar content into Q&A pairs, optimized for voice parsing. These are published using schema markup like FAQPage, HowTo, and Speakable formats.

We also run weekly audits to ensure content is concise, scannable, and clearly answers intent-based queries. This helps smart assistants quickly parse the information and cite it in audio results.

Optimizing for Visual Discovery

Visual AI search depends on what your images and visuals say to algorithms. For example, when Google Lens analyzes a product image, it looks at:

ALT text

Filename semantics

Surrounding text context

Structured product data

Image quality and clarity

So even if you have a stunning product photo, if it’s named “IMG_8454.jpg” and buried in a slow-loading page, it won’t rank in Lens or other visual searches.

We implement Make.com flows that auto-rename images based on content, generate clean alt text, and associate visuals with relevant semantic tags pulled from content clusters. These actions are scalable across hundreds of media files.

By making visuals speak the same language as search engines, we turn every image into a potential entry point.

App Optimization for Voice & Visual Interfaces

If you have a mobile app, you need to think beyond App Store SEO. How discoverable is your app content through Siri or Google Assistant? Can Google Lens recognize app UI or in-app products?

Agensync helps apps implement App Actions (for Android) and Siri Shortcuts (for iOS) so that app content is callable via voice. We structure in-app data using deep linking and metadata so AI tools can index it properly.

On the visual side, screenshots and media must be searchable. We automate tagging and indexing of app visuals so they appear in Lens-style queries when users search for similar UI or features.

Preparing for Contextual Triggers

Multimodal discovery is all about context. Voice queries might come with location, history, or even emotional tone. Visual queries may include lighting, background objects, or spatial relevance.

That’s why modern SEO isn’t just about content—it’s about environment.

We help clients use automation to:

Detect user context from entry points (geo, device, time)

Serve tailored content blocks

Prioritize responses based on predicted intent

All this is orchestrated via automation flows that adapt pages to match multimodal inputs. It’s SEO that responds in real time to real-world cues.

Performance and Accessibility

Fast, accessible, and lightweight pages perform better in both voice and visual search. Voice assistants drop slow-loading sites. Visual tools avoid cluttered pages with non-semantic structures.

At Agensync, we run Make-based audits connected to Lighthouse metrics. These scans detect and alert for performance bottlenecks, missing accessibility tags, and markup errors that reduce visibility across modalities.

You don’t need a full-time dev team. You just need workflows that constantly refine your readiness.

A New Type of SEO Visibility

Voice and visual search open the door to being found in new, more intuitive ways. But only if your content is built to be read, seen, and heard clearly.

Agensync enables businesses to scale this through structured content, real-time automation, and multimodal readiness. We believe the future of SEO isn’t in being louder—it’s in being easier to understand, no matter how someone searches.

If you're ready to be visible through AI lenses and smart speakers alike, now's the time to prepare.

Stay Informed with AgenSync Blog

Explore Insights, Tips, and Inspiration

Dive into our blog, where we share valuable insights, tips, and inspirational stories on co-working, entrepreneurship, productivity, and more. Stay informed, get inspired, and enrich your journey with Wedoes.